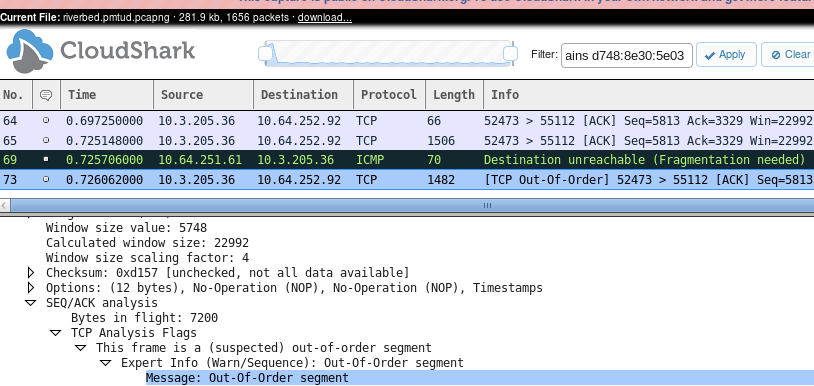

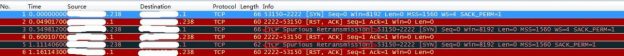

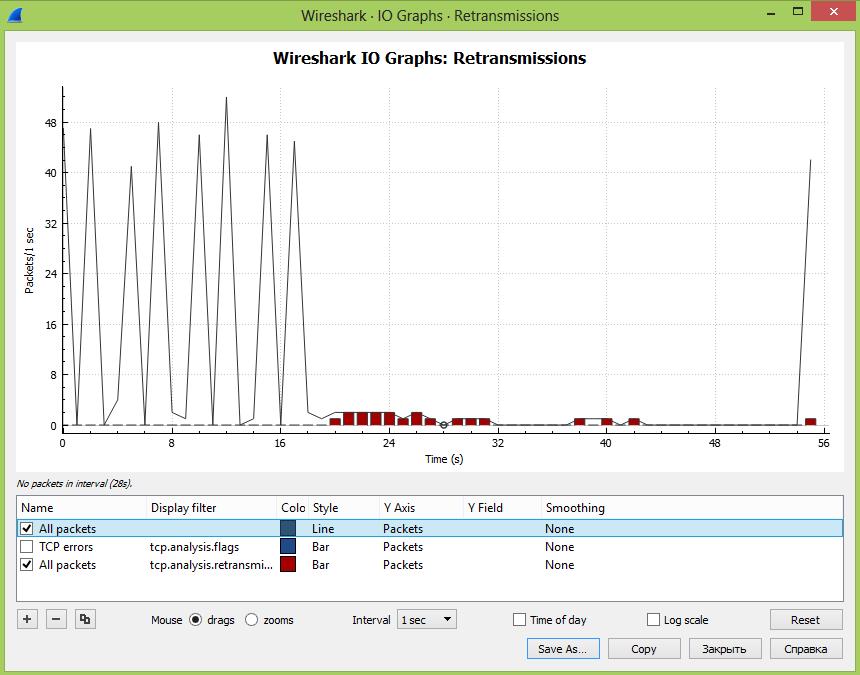

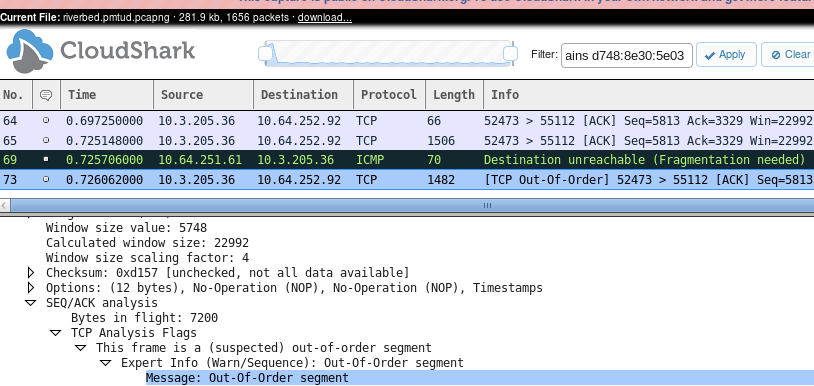

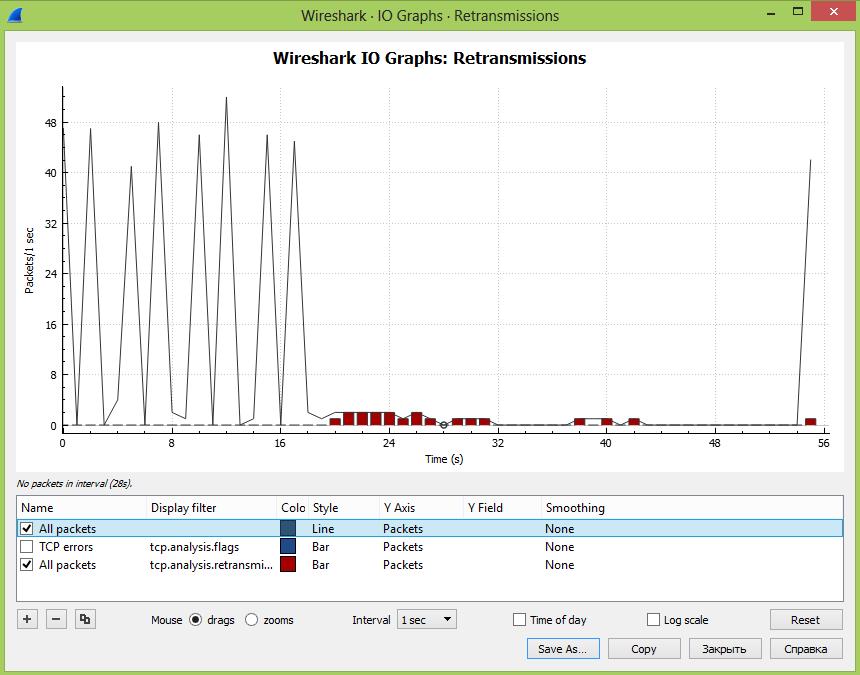

TCP Spurious Retransmissions may be seen from time to time when using Wireshark. How do you see them? Capture some data with Wireshark on your workstation or server. Click on Statistics > IO Graphs, then click throughout the graph on the color that indicates TCP errors; the Wireshark main screen jumps to the point in time you are clicking. Analyze the content and look for Spurious Retransmission. These packets indicate that the sender retransmitted data that had already been acknowledged. Any TCP capture will show some packet loss, so occasional retransmissions are normal events. If they become common, however, they may start to impact application performance across your network. Look at the path between your devices and your server for Ethernet errors, discards, and possible high utilization, which may indicate wireless or cabling issues.

We have reproduced the issue using the Spray “benchmark” app. When load exceeds around 1200 connections/second, the app starts to take a long time to reply to new TCP connections, even though the CPU on the machine is only at 15-20%. So there is some bottleneck preventing Spray from accepting TCP connections fast enough.

I can’t upload the test harness here, but it just makes lots of HTTP requests, using a new TCP connection for each request.

We have already eliminated the following possible causes:

- TIME_WAIT issues, including TCP port exhaustion
- Limits on the maximum number of open file handles

I have included some relevant graphs, as well as the diff of changes we had to make to the benchmark app (nothing significant). If anyone has any ideas about what might be causing this bottleneck, or any suggestions on relevant stats we could gather, they would be much appreciated.

In these graphs, the test starts at 10:00 and takes about 20 minutes to ramp up the load, reaching 1200 connections/second at around 10:20. The graphs show:

- Response times (these start low, then increase when the TCP connection issue manifests after around 10:20)
- “Overflow” events, as reported by “netstat -s” (“times the listen queue of a socket overflowed”)

I think this proves that the system isn’t accepting TCP connections fast enough. We have graphs showing that the slow requests take 3 or 9 seconds to complete, which is consistent with TCP backoff times when a connection attempt fails.

The diff of changes we made to the benchmark app:

- `diff --git a/spray/build.sbt b/spray/build.sbt`
- `diff --git a/spray/src/main/resources/nf b/spray/src/main/resources/nf`
- `diff --git a/spray/src/main/scala/spray/examples/BenchmarkService.scala b/spray/src/main/scala/spray/examples/BenchmarkService.scala`
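The 3-second and 9-second delays in the Spray report fit a client whose connection attempt (SYN) goes unanswered and is retried with exponential backoff. The sketch below works through that arithmetic. It assumes an initial retransmission timeout of 3 seconds, the older value from RFC 1122 and RFC 2988. RFC 6298 lowered this to 1 second, so real client stacks may differ. The function name `syn_retry_delays` is hypothetical and only for illustration.

```python
def syn_retry_delays(initial_rto=3.0, retries=4):
    """Approximate extra connect() delay (seconds) when the 1st, 2nd, ...
    retransmitted SYN is the first one the server answers.

    Assumption: the timeout doubles after every unanswered SYN
    (exponential backoff); round-trip time is ignored.
    """
    delays = []
    elapsed = 0.0
    rto = initial_rto
    for _ in range(retries):
        elapsed += rto   # wait one full timeout, then retransmit the SYN
        delays.append(elapsed)
        rto *= 2         # back off: the next timeout is twice as long
    return delays


print(syn_retry_delays())         # [3.0, 9.0, 21.0, 45.0]
print(syn_retry_delays(1.0, 4))   # [1.0, 3.0, 7.0, 15.0]
```

With a 3-second initial timeout, a SYN dropped once costs about 3 s and a SYN dropped twice costs about 9 s. That matches the two delay clusters described in the post.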

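On Linux, the “times the listen queue of a socket overflowed” line printed by “netstat -s” comes from the TcpExt `ListenOverflows` counter, which is exposed in `/proc/net/netstat`. This is a Linux-specific assumption. The sketch below reads that counter directly so it can be sampled alongside load. The helpers `parse_proc_netstat` and `listen_overflows` are hypothetical, and the sample text uses made-up illustrative values that do not come from the report.

```python
def parse_proc_netstat(text):
    """Parse /proc/net/netstat-style text into nested dicts, e.g.
    {"TcpExt": {"ListenOverflows": 42, ...}, "IpExt": {...}}.

    The file stores each group as a pair of lines sharing a "Prefix:" token:
    first a line of field names, then a line of integer values.
    """
    rows = [line.split() for line in text.splitlines() if line.strip()]
    stats = {}
    for header, values in zip(rows[0::2], rows[1::2]):
        prefix = header[0].rstrip(":")
        stats[prefix] = {name: int(val) for name, val in zip(header[1:], values[1:])}
    return stats


def listen_overflows(path="/proc/net/netstat"):
    """Read the cumulative ListenOverflows counter (Linux only)."""
    with open(path) as f:
        return parse_proc_netstat(f.read())["TcpExt"]["ListenOverflows"]


# Illustrative input only; a real file has many more fields.
sample = """TcpExt: SyncookiesSent ListenOverflows ListenDrops
TcpExt: 0 42 42
IpExt: InNoRoutes InTruncatedPkts
IpExt: 0 0
"""
print(parse_proc_netstat(sample)["TcpExt"]["ListenOverflows"])  # 42
```

The counter is cumulative since boot. Sampling it at a fixed interval and plotting the difference gives a per-interval overflow rate, comparable to the “Overflow” graph described in the post.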
0 kommentar(er)

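The Spurious Retransmission check from the Wireshark walkthrough can be sketched as a simple predicate. Wireshark documents that it flags `tcp.analysis.spurious_retransmission` when four things hold: the segment is not a keepalive, it carries data, the peer has already sent an ACK on the flow, and the segment's next sequence number is at or below that ACK. The sketch below is a simplified illustration of that rule, not Wireshark's own code. It ignores 32-bit sequence-number wraparound, and the `Segment` type and function name are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    seq: int                    # sequence number of the first payload byte
    length: int                 # TCP payload length in bytes
    is_keepalive: bool = False


def is_spurious_retransmission(seg: Segment, last_ack_from_peer: Optional[int]) -> bool:
    """True if every byte in seg was already acknowledged by the peer.

    Simplified: no sequence-number wraparound handling.
    """
    if seg.is_keepalive or seg.length <= 0:
        return False            # keepalives and empty segments are not flagged
    if last_ack_from_peer is None:
        return False            # peer has not acknowledged anything yet
    next_seq = seg.seq + seg.length
    return next_seq <= last_ack_from_peer


# Peer has ACKed up to byte 5000: resending bytes 4000-4999 is spurious.
print(is_spurious_retransmission(Segment(seq=4000, length=1000), 5000))  # True
print(is_spurious_retransmission(Segment(seq=5000, length=1000), 5000))  # False
```

In a live capture, typing `tcp.analysis.spurious_retransmission` into the display filter bar shows the same class of packets without clicking through the IO graph.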
0 kommentar(er)